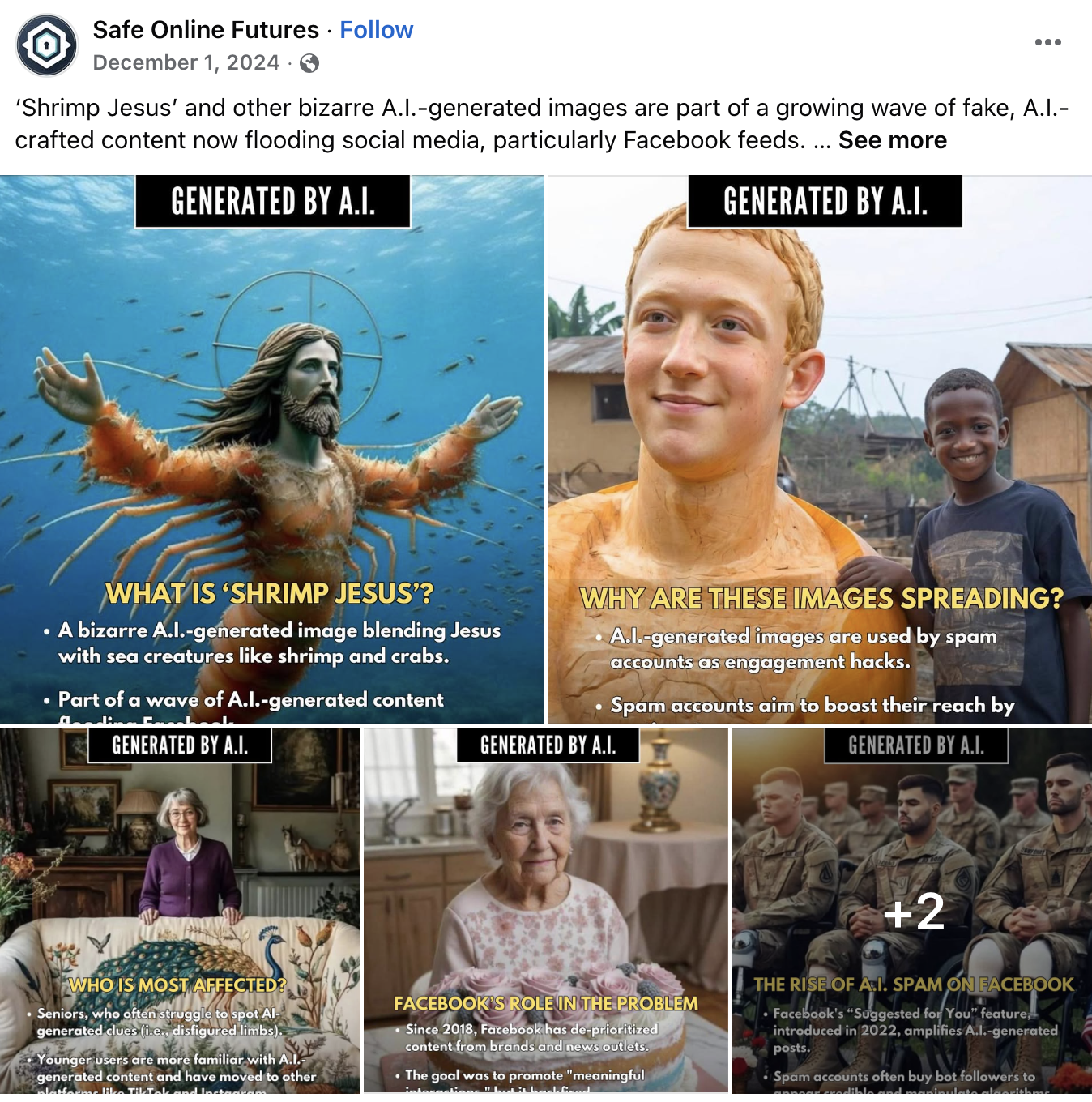

Picture this: You’re scrolling through your Facebook feed (as we all do) and land on an image of Jesus Christ. Made entirely of shrimp. Getting thousands of “Amens” from people who seem genuinely moved by this crustacean saviour.

You don’t even try to resist the urge to snort-laugh. It’s hilarious, and it’s obviously bullshit… but what about the growing number of people responding with “heart” and “care” emojis, legitimising it rather than calling it out? Surely not…

Welcome to the bizarre world of AI slop, and trust me, it’s not as harmless as it sounds.

What Exactly Is AI Slop?

AI slop is the digital equivalent of processed food: cheap, mass-produced content designed to look appealing at first glance while offering zero nutritional value. It’s low-quality content generated in bulk with AI tools, and it’s often absurd, clickbait-y, glitchy and riddled with errors.

The term covers everything from photorealistic images that are too good to be true (children holding paintings that look like the work of professional artists, quadruplets celebrating their 110th birthday, or majestic log cabin interiors that are the stuff of Airbnb dreams) through to completely fabricated news articles and academic papers.

But here’s the sad truth: to some extent, it’s working. These kinds of images have received insane levels of engagement on social media platforms, a sign that a lot of people are falling for this synthetic crap.

The Shrimp Jesus Phenomenon: A Case Study in Digital Deception

When artist Max Arrington first drew Shrimp Jesus, he never dreamed that AI-generated images of Jesus Christ with shrimp-like arms and legs would become a viral internet phenomenon. What started as a joke has morphed into something far more troubling, and for the thinkers in the room, that should be a concern.

The Stanford Internet Observatory studied over 100 Facebook pages posting AI-generated content and found something alarming: Facebook’s algorithm recommended reams of other AI-generated content to users who engaged with these posts, even briefly.

This isn’t an isolated event. What we’ve got going on is a systematic flood of fake content that includes:

- Fake children’s artwork: Images of kids supposedly showing off masterpieces they “made with their own hands”.

- Non-existent book recommendations: The Chicago Sun-Times and Philadelphia Inquirer took reputational hits when May 2025 editions featured a special section that included a summer reading list recommending books that don’t exist. Oooops.

- Fabricated academic papers: Researchers citing studies that were never conducted and statistics that can’t be verified.

- Impossible dream homes: Perfect interiors that exist only in AI’s imagination.

The Hallucination Crisis is Getting Worse, Not Better

Here’s what should really worry everyone: AI is getting more accurate in some respects, yet at the same time it’s becoming increasingly confident about being wrong.

OpenAI’s own testing of its newer o3 and o4-mini reasoning models found that they are substantially more prone to hallucinating, or making up false information, than the earlier o1 model. According to the company, o3, its most powerful system, hallucinated 33 percent of the time on its PersonQA benchmark.

Let that sink in for a quick minute. On a benchmark built around questions about people, roughly one in three answers from one of the “most advanced” AI models contained fabricated information presented as fact.

Is this merely a bug, or a sign of something more problematic? At least the concerns are being taken seriously: OpenAI says it is continually working to minimise hallucinations and improve the accuracy and reliability of its models.

Real-World Consequences: When Fake Content Causes Real Damage

The problem isn’t limited to viral memes and fake videos. AI hallucinations are now having serious real-world consequences:

Legal Blunders: AI legal expert Damien Charlotin tracks court decisions in cases where lawyers submitted filings containing AI hallucinations. His database recorded more than 30 such instances in May 2025 alone. Of course, there are likely to be many, many more.

Educational Mistrust: A Texas professor failed his entire class after ChatGPT falsely flagged their essays as AI-generated, even though the students had written them themselves.

Media Mishaps: Marco Buscaglia admitted using AI to help put together a recommended summer reading list and failing to fact-check the output, which led major newspapers to recommend books that don’t exist.

Platform Overload: AI-generated “Boring History” videos are flooding YouTube with surface-level, automated content that’s drowning out work made by real historians and anthropologists.

Why This Matters for Your Brand (And It’s Not What You Think)

You might be sitting back thinking, “Well, I’m not creating Shrimp Jesus content, so I’m fine.” If so, you’re missing the bigger picture.

AI slop’s impact isn’t limited to glaringly fake content. The hit is being felt far more widely, and what we’re facing is the erosion of trust in all digital content. When your potential customers can’t tell what’s real anymore, they become suspicious of everything. And that’s not good news.

Here’s what this means for your brand:

The Trust Recession Is Coming

Social media is now the primary news source for many users around the world, but when a growing share of what they see is potentially fabricated, trust becomes the scarcest commodity online.

Your authentic, thoughtful content now has to compete not just with others in your industry, but with an ocean of synthetic noise designed to exploit attention algorithms.

Quality Becomes Your Competitive Advantage

While everyone else is racing to pump out more content faster, the smart money is on going deeper, not broader. With the content ecosystem inundated with synthetic noise, your thoughtfulness and personal insights become powerful tools.

Audiences are getting better at spotting fake content, which means they’re likely to reward brands that consistently deliver genuine value and an authentic voice.

The Algorithm Problem

When users react to a post by liking it, sharing it or leaving a comment, the effect reaches well beyond that single interaction. Every reaction signals to the platform’s ranking algorithms that the content may deserve a place in even more people’s feeds.

Social platforms are financially incentivised to promote content that generates engagement, regardless of quality or accuracy. This means your carefully prepared, factual content might get buried under AI-generated clickbait.

How to Future-Proof Your Content Strategy

1. Lead with Transparency: Don’t just slap a “we use AI” disclaimer in your footer. Be upfront about how you use AI tools and what human oversight looks like in your process.

2. Invest in Fact-Checking Workflows: The best way to mitigate the impact of AI hallucinations is to catch them before they’re published. Build verification into every step of your content creation process.

3. Double Down on Your Human Voice: AI can help with research and first drafts, but your unique perspective, industry experience, and authentic voice are what separate you from the slop.

4. Create Content That Can’t Be Faked: Behind-the-scenes content, personal anecdotes, real customer interviews, and original research are harder for AI to replicate convincingly.

5. Educate Your Audience: Help your customers become better at spotting AI slop. It builds trust and positions you as a reliable source in an unreliable landscape.

The Bottom Line: Quality Wins in the Long Game

AI slop might be flooding the internet right now, but it’s also creating an incredible opportunity for brands willing to commit to quality and authenticity.

While your competitors are cutting corners with crappy AI-generated everything, you can build lasting relationships with an audience that’s hungry for content they can actually trust.

The brands that survive the AI slop invasion won’t be the ones that create content the fastest. They’ll be the ones whose audiences never have to wonder whether what they’re reading is reliable.

Ready to build an AI strategy that enhances rather than replaces your human voice? Subscribe to Hey There Humanoid for regular insights on using AI responsibly while keeping your brand authentically, unmistakably you.